SA Mediated Hybrid Genetic Algorithm for Neural Network Topo

Well this has been waiting for some time. I originally wrote my GA a few years back and it performed quite well at solving non-linear type equations like the following.

(Fig 1 Non-linear equation)

A Genetic Algorithm is a program that uses concepts borrowed from natural selection to select, mutate and mate individuals each of which offer solutions to the problem. As the GA selects individuals with the greatest fitness to propogate their solutions within the gene pool.

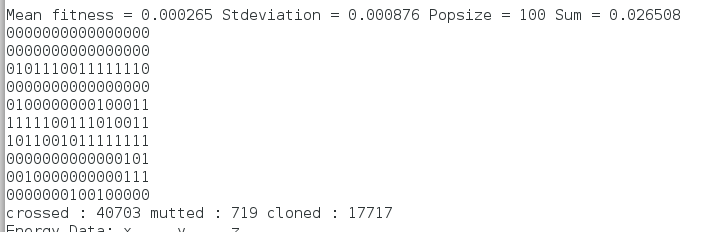

Each solution is encoded as artificial chromosomes like our dna. The algorithm converges to the best solution.

(Fig 2 Chromosome encoding)

It can be used to create solutions for more than one problem - Multi-objective's and find multiiple solutions for the same problem. It can also change a variety of parameters for any given problem in order to find the optimal solution and avoid greedily found suboptimal solutions.

Tweaking the GA can reveal more dimensions to the problem that can lead to better solutions.

I then decided to improve on my GA by using Multithreading. This is very popular now as GPU's programming techniques means instead of having traditional for loop you can have each execution made in parallel. The GA I wrote uses Threads or Pthreads to be accurate and has been around longer than GPU processing.

It works by creating a function called a thread function which executes the GA's cost function for each individual selected by the GA in parallel.

(Fig.3 Example of Cost function used by GA Multithreading.)

Here I show how the GA program can solve two problems by creating two cost functions and combining them within the thread function.

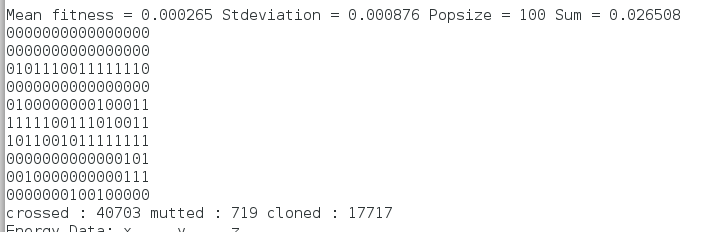

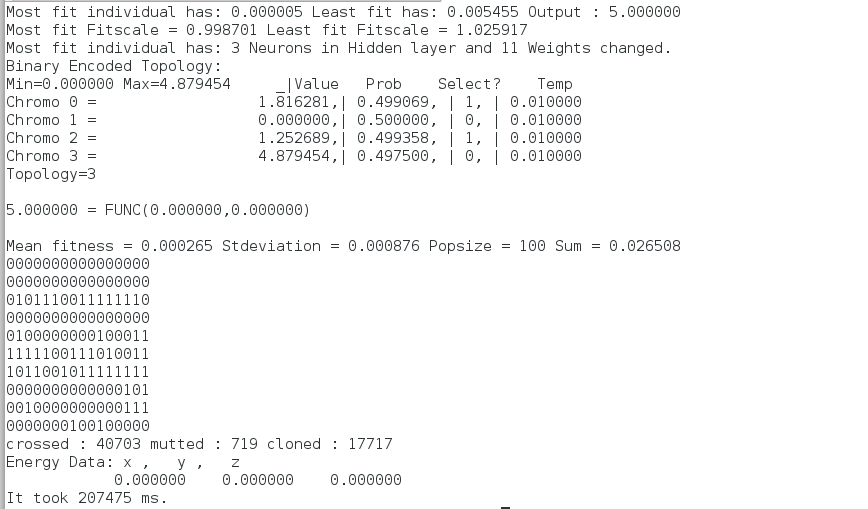

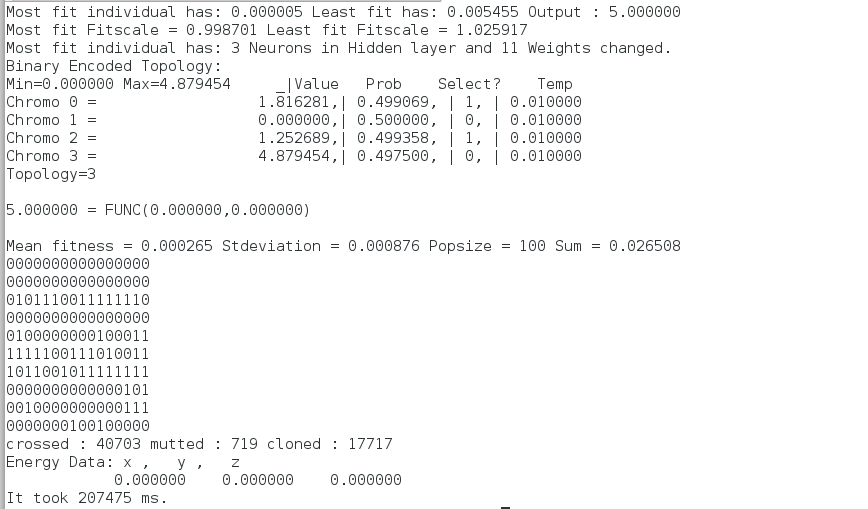

Here is an output of the program as it solves two problems one is a non-linear equation the other is a Neural Network.

(Fig. 4 Program Output)

The Neural network is applied to another problem the well known X-or problem. The weights of the Neural Network are trained using Backpropogation. Each Neural Networks topology (Number of Neurons) is encoded in the Chromosome's of each of the GA's individual solutions and tested using the Thread function in order to measure its fitness value.

As I mentioned earlier the GA is capable of solving more than one problem in more than one dimension by changing more than one parameter for each problem. Here I have changed the following two parameters for the Neural Network X-or problem.

1. Weight Updates

2. Topology (Number of Neurons in Hidden Layer)

As Multiple Neural Networks are created or evolved using the GA the solution converges to an optimal one that improves on the Neural Networks solution to the X-or.

This is like a form of benchmarking and if you read around you will see that

different type of Neural Networks can be used for a variety of different problems.

An additional change that I introduced to this type of non-linear search method was to change the GA into a hybrid GA that uses an additional search method known as Simulated Annealing.

Borrowed from the steel industry basically SA changes the properties of a search operator by gradually decreasing a new parameter used to produce the solution - Temperature. As the Temperature (Artificial in this case) is slowley reduced as with steel the properties of the solution converge to a fixed state. If this fixed state improves on a current best solution it is conserved and used to guide further Annealing.

In this case the Hybrid GA uses this solution to guide its evolution improving on its search for an Optimal Solution.

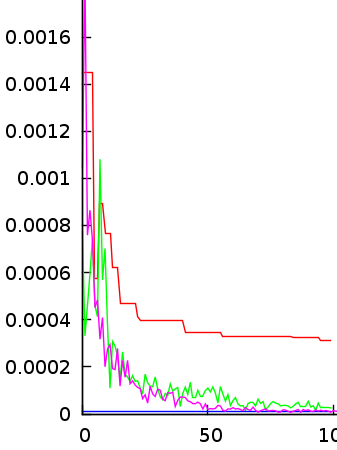

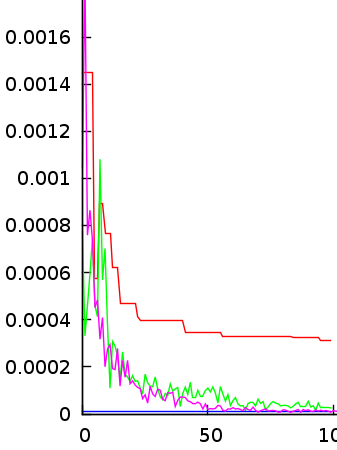

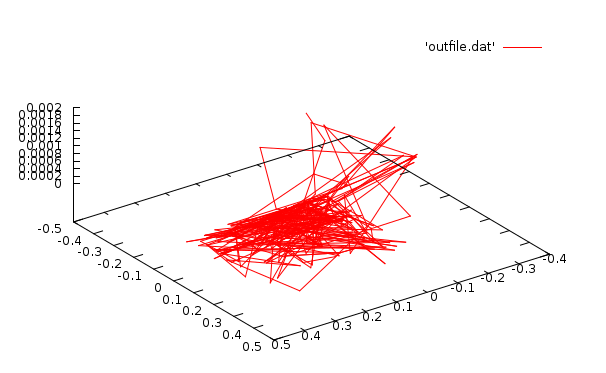

Here are some graphs to illustrate how Simulated Annealing has improved the GA's ability to slect the RIGHT topology for the problem.

(Fig 5 Error Curves for SA mediated v's Non-SA mediated GA)

(Red-Line is Non-Meditaed Green and Purple are SA-Mediated)

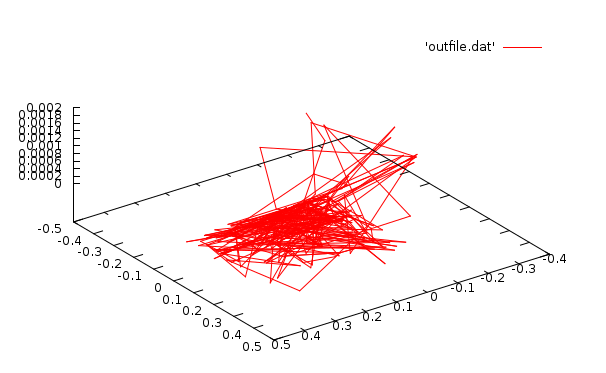

(Fig 6 Energy Map showing the effects of Topology search by GA for a Neural Network)

In conclusion it seems clear that using Local Search methods such as the Simulated Annealing technique alongside Genetic Algorithms that are good at solving Multiple Problems in Multiple dimension to build varied model free neural network archetectures is a coherant framework to move forward with in building programs that can do more.

Also it is an area that with some application of programming can be a rewarding pursuit for anyone wishing to gain ground in AI programming.

Dont be restricted to using off the shelf AI packages as this may not produce the same answers in AI also dont be daunted by the complexity of most Machine Learning techniques as with the right reading around and a minimum of theory writing Algorithms can be considered a creative pursuit open to anyone who can develop some basic skills in programming.

Here is the full code for running the GA download it and run it on a Linux system with pthreads installed:

Use this to compile it:

g++ -o xor_ga_24 xor_ga.cc -lpthread

GAXorNN.tar.gz

The package includes the Neuron Centred Algorithm

Skills required:

> Basic programming in C/C++

> Mathematics GCSE upwards

> Ability to read and understand Basic AI concepts

Start simple:

1. Data inputs and outputs

i) Manufacture your own data

ii) Normalise it - Make sure it stays within a range of numbers usually 0,1

iii) Read and write functions (See earlier Jpeg read)

2. Use Benchmarking to test basic function of Algo

i) X-or and Non-Linear Algebra like the Schaefer

ii) Dont be scared of using the simplest test case first 1/x^2

3. Initialise your Variables

i) Use the rand() function for starts (Easy)

ii) Use more advanced Randomisation techniques (Experiment)

iv) Learn how to SEED your RNG e.g srand(time(NULL))

4. Use Classes to define OBJECT's within your algorithm

i) Each OBJECT has its own variables PUBLIC or PRIVATE

ii) Each OBJECT can be called more than once e.g Neuron

iii) OBJECTS ideal for Multi-threading GA's

5. Write coherant functions for each OBJECT

i) Learn to apply functions to different OBJECTs e.g Layers, Neurons, Individuals

ii)functions can use the same parameters and objects included within the main class of the program

iii) Use Pointers to transfer large data between functions

6. Watch out for Memory leaks

i) As the program becomes larger memory becomes important.

ii) develop a technique for finding bugs in your program

iii) make sure during initialisation that each array / object has enough memory allocated

7. Start simple.

8. Another way to improve Bug detection

i) Shutdown various parts of the program loop to isolate the bug

ii) Just done this with a Program to find problem.

iii) Use Git and/or keep track of your version history - if the version your working on develops a glitch compare it to previous working versions.

I will upload my GA at some point (Its got lots of good ideas in it)

Neural Network uses dynamic Coefficents - that means Learning Rate etc is changed with each iteration depending on the error.

Read Seed Army

Follow @ITsol4u

Tweets by ITsol4u

- Code: Select all

//schaefer

t1 = sin(sqrt(pow(A,2) + pow(B,2)));

t2 = 1 + 0.001*(A*A + B*B);

t3 = 0.5 + (t1*t1 - 0.5)/(t2*t2);

(Fig 1 Non-linear equation)

A Genetic Algorithm is a program that uses concepts borrowed from natural selection to select, mutate and mate individuals each of which offer solutions to the problem. As the GA selects individuals with the greatest fitness to propogate their solutions within the gene pool.

Each solution is encoded as artificial chromosomes like our dna. The algorithm converges to the best solution.

(Fig 2 Chromosome encoding)

It can be used to create solutions for more than one problem - Multi-objective's and find multiiple solutions for the same problem. It can also change a variety of parameters for any given problem in order to find the optimal solution and avoid greedily found suboptimal solutions.

Tweaking the GA can reveal more dimensions to the problem that can lead to better solutions.

I then decided to improve on my GA by using Multithreading. This is very popular now as GPU's programming techniques means instead of having traditional for loop you can have each execution made in parallel. The GA I wrote uses Threads or Pthreads to be accurate and has been around longer than GPU processing.

It works by creating a function called a thread function which executes the GA's cost function for each individual selected by the GA in parallel.

- Code: Select all

void* thread_function(void *p)

{

pthread_mutex_lock (&mutexdata);

int currentthread = this->whichthread;

this->whichthread+=1;

entity *ent = this->currentpop->entity_array[currentthread];

//****Objective 1*****//

double A=0,B=0,C=0,D=0,E=0,F=0;

int i,j,k,l;

double fit1,fit2,t4,sumfit;

A = bin2dec_thread(ent->chromosome[0],1);

B = bin2dec_thread(ent->chromosome[1],1);

t4 = func(A,B);

double thisfit=0;

ent->output[0] = t4;

ent->objfit[0] = sqrt(pow(fit1,2));

thisfit = sqrt(pow(fit1,2));

ent->fitness = thisfit;

//****Objective 2*****//

for(int i=1;i<toposize;i++){

mynet->weights[i] = getsign*bin2dec_thread(ent->chromosome[i+_endtop+_endfunc+2],1)/divider;

}

m_pointertofunction(mynet->weights,&mynet->error,input_array);

(Fig.3 Example of Cost function used by GA Multithreading.)

Here I show how the GA program can solve two problems by creating two cost functions and combining them within the thread function.

Here is an output of the program as it solves two problems one is a non-linear equation the other is a Neural Network.

(Fig. 4 Program Output)

The Neural network is applied to another problem the well known X-or problem. The weights of the Neural Network are trained using Backpropogation. Each Neural Networks topology (Number of Neurons) is encoded in the Chromosome's of each of the GA's individual solutions and tested using the Thread function in order to measure its fitness value.

As I mentioned earlier the GA is capable of solving more than one problem in more than one dimension by changing more than one parameter for each problem. Here I have changed the following two parameters for the Neural Network X-or problem.

1. Weight Updates

2. Topology (Number of Neurons in Hidden Layer)

As Multiple Neural Networks are created or evolved using the GA the solution converges to an optimal one that improves on the Neural Networks solution to the X-or.

This is like a form of benchmarking and if you read around you will see that

different type of Neural Networks can be used for a variety of different problems.

An additional change that I introduced to this type of non-linear search method was to change the GA into a hybrid GA that uses an additional search method known as Simulated Annealing.

Borrowed from the steel industry basically SA changes the properties of a search operator by gradually decreasing a new parameter used to produce the solution - Temperature. As the Temperature (Artificial in this case) is slowley reduced as with steel the properties of the solution converge to a fixed state. If this fixed state improves on a current best solution it is conserved and used to guide further Annealing.

In this case the Hybrid GA uses this solution to guide its evolution improving on its search for an Optimal Solution.

Here are some graphs to illustrate how Simulated Annealing has improved the GA's ability to slect the RIGHT topology for the problem.

(Fig 5 Error Curves for SA mediated v's Non-SA mediated GA)

(Red-Line is Non-Meditaed Green and Purple are SA-Mediated)

(Fig 6 Energy Map showing the effects of Topology search by GA for a Neural Network)

In conclusion it seems clear that using Local Search methods such as the Simulated Annealing technique alongside Genetic Algorithms that are good at solving Multiple Problems in Multiple dimension to build varied model free neural network archetectures is a coherant framework to move forward with in building programs that can do more.

Also it is an area that with some application of programming can be a rewarding pursuit for anyone wishing to gain ground in AI programming.

Dont be restricted to using off the shelf AI packages as this may not produce the same answers in AI also dont be daunted by the complexity of most Machine Learning techniques as with the right reading around and a minimum of theory writing Algorithms can be considered a creative pursuit open to anyone who can develop some basic skills in programming.

Here is the full code for running the GA download it and run it on a Linux system with pthreads installed:

Use this to compile it:

g++ -o xor_ga_24 xor_ga.cc -lpthread

GAXorNN.tar.gz

The package includes the Neuron Centred Algorithm

Skills required:

> Basic programming in C/C++

> Mathematics GCSE upwards

> Ability to read and understand Basic AI concepts

Start simple:

1. Data inputs and outputs

i) Manufacture your own data

ii) Normalise it - Make sure it stays within a range of numbers usually 0,1

iii) Read and write functions (See earlier Jpeg read)

2. Use Benchmarking to test basic function of Algo

i) X-or and Non-Linear Algebra like the Schaefer

ii) Dont be scared of using the simplest test case first 1/x^2

3. Initialise your Variables

i) Use the rand() function for starts (Easy)

ii) Use more advanced Randomisation techniques (Experiment)

iv) Learn how to SEED your RNG e.g srand(time(NULL))

4. Use Classes to define OBJECT's within your algorithm

i) Each OBJECT has its own variables PUBLIC or PRIVATE

ii) Each OBJECT can be called more than once e.g Neuron

iii) OBJECTS ideal for Multi-threading GA's

5. Write coherant functions for each OBJECT

i) Learn to apply functions to different OBJECTs e.g Layers, Neurons, Individuals

ii)functions can use the same parameters and objects included within the main class of the program

iii) Use Pointers to transfer large data between functions

6. Watch out for Memory leaks

i) As the program becomes larger memory becomes important.

ii) develop a technique for finding bugs in your program

iii) make sure during initialisation that each array / object has enough memory allocated

7. Start simple.

8. Another way to improve Bug detection

i) Shutdown various parts of the program loop to isolate the bug

ii) Just done this with a Program to find problem.

iii) Use Git and/or keep track of your version history - if the version your working on develops a glitch compare it to previous working versions.

I will upload my GA at some point (Its got lots of good ideas in it)

Neural Network uses dynamic Coefficents - that means Learning Rate etc is changed with each iteration depending on the error.

Read Seed Army

Follow @ITsol4u

Tweets by ITsol4u